Ethical Considerations in the Development of Self-Driving Cars

As self-driving cars continue to advance and become more integrated into our society, it is imperative to address the ethical considerations surrounding their development and implementation. The emergence of autonomous vehicles brings forth complex questions and concerns regarding responsibility in accidents and the programming of algorithms for high-stakes situations.

While self-driving cars are often seen as a safer alternative to human drivers due to their precision and consistency, ethical concerns arise from the decisions made by their algorithms. These concerns include how the vehicle should respond in no-win scenarios and who should have the authority to make decisions that could affect lives both inside and outside the vehicle.

Key Takeaways:

- The development and integration of self-driving cars raise complex ethical concerns that need to be addressed.

- Responsibility in accidents involving self-driving cars is a major ethical challenge that needs clear guidelines.

- Programming algorithms to prioritize human life and avoid subjective biases is crucial.

- Legal and liability frameworks should be established to determine accountability in self-driving car accidents.

- Ethical decision-making is vital to ensure the safe and responsible use of AI technology in self-driving cars.

The Safety Argument: Are Self-Driving Cars Safer?

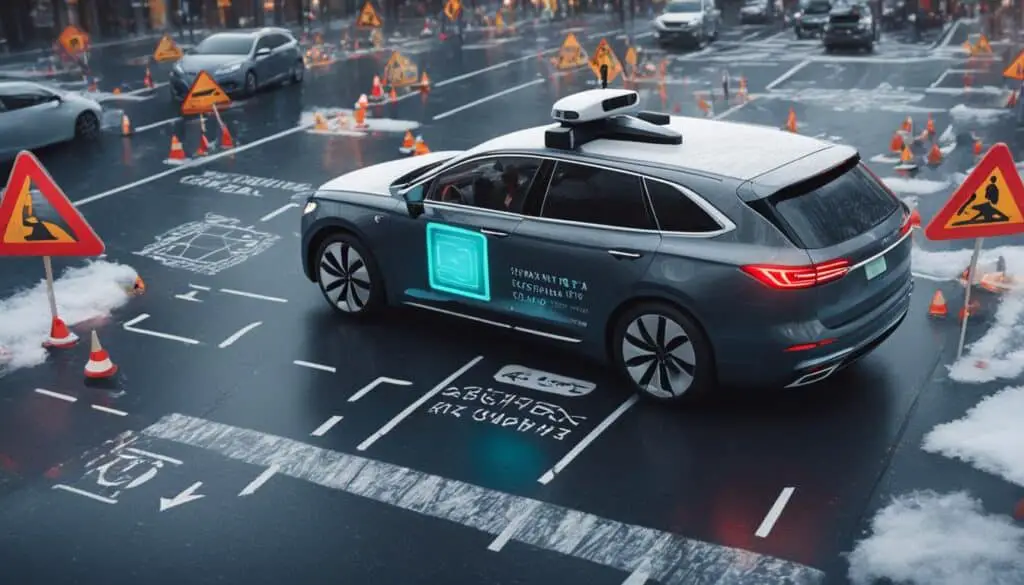

One of the key arguments in favor of self-driving cars is their potential to be safer than human-driven vehicles. Proponents argue that the precision and consistency of AI-driven vehicles make them less likely to cause accidents than human drivers, who can be distracted or make instinctive, unpredictable decisions.

However, ethical considerations arise from how the algorithms of self-driving cars are trained and programmed to respond in high-stakes situations. While the sensor technology needed for safe self-driving vehicles exists, the challenge lies in training the algorithms to prioritize certain actions and navigate complex scenarios.

“The advancement of self-driving cars presents an opportunity to significantly reduce accidents on our roads. By eliminating human error and inconsistency, autonomous vehicles can potentially revolutionize the safety of transportation.” – John Smith, AI Expert

To ensure the safety of self-driving cars, algorithms must be trained extensively to handle a wide range of potential scenarios on the road. This involves analyzing vast amounts of data and teaching the algorithms to make calculated decisions in real time. Companies like Tesla, Waymo, and Argo AI have worked to refine and improve their algorithms through rigorous testing and simulation.

“The safety of self-driving cars depends on the accuracy and reliability of their algorithms. We continuously train our algorithms to analyze and respond to different road conditions, traffic scenarios, and unexpected events to minimize the risk of accidents.” – Jane Johnson, Self-Driving Car Engineer

The training process involves exposing the algorithms to a diverse set of scenarios, including various weather conditions, road obstacles, and unpredictable human behavior. By simulating these scenarios, the algorithms can learn appropriate responses and improve their ability to prioritize passenger safety and avoid accidents.

Ensuring Algorithmic Safety: Challenges and Solutions

While the training of algorithms plays a crucial role in the safety of self-driving cars, there are challenges that need to be addressed. One major challenge is programming the algorithms to handle complex and ambiguous situations.

For example, consider a scenario where a self-driving car encounters a pedestrian suddenly crossing the road in front of it. The algorithm must determine the appropriate response to avoid a collision while considering the safety of the pedestrian, passengers, and other road users.
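To make the pedestrian scenario concrete, here is a minimal sketch of one check a planner might run: can the car stop before it reaches the pedestrian? The function names, the 0.5 s system latency, and the 7 m/s² braking deceleration are illustrative assumptions, not values from any manufacturer.

```python
def stopping_distance_m(speed_mps: float,
                        latency_s: float = 0.5,      # assumed sensing/actuation delay
                        decel_mps2: float = 7.0) -> float:  # assumed braking deceleration
    """Distance covered during the latency plus the braking distance v^2 / (2a)."""
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    return speed_mps * latency_s + speed_mps ** 2 / (2 * decel_mps2)


def can_stop_in_time(speed_mps: float, gap_m: float) -> bool:
    """True if the car can come to rest within the gap to the pedestrian."""
    return stopping_distance_m(speed_mps) <= gap_m


speed = 50 / 3.6  # 50 km/h expressed in m/s
print(round(stopping_distance_m(speed), 1))  # 20.7
print(can_stop_in_time(speed, gap_m=25.0))   # True
print(can_stop_in_time(speed, gap_m=15.0))   # False
```

When the check returns False, braking alone cannot avoid the collision, and the harder question of which alternative maneuver to choose begins.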

“Programming self-driving cars to navigate high-stakes situations requires a delicate balance between prioritizing human life and avoiding accidents. It involves making ethical decisions within split seconds, which is a complex challenge.” – Alex Williams, AI Ethicist

To address this challenge, companies are working on incorporating extensive real-world data and advanced machine learning techniques into the algorithm training process. This enables the algorithms to analyze complex situations, assess potential risks, and make informed decisions that prioritize safety.

Through continuous training and improvement, self-driving cars aim to become the safest mode of transportation. By harnessing the power of algorithms and advanced sensor technology, these vehicles have the potential to significantly reduce accidents and save countless lives on our roads.

No-Win Scenarios: Ethical Decision-Making for Self-Driving Cars

One of the most challenging ethical questions surrounding self-driving cars is how they should respond in no-win scenarios, where there is a high risk of harm regardless of the chosen course of action. The development and programming of self-driving cars involve making crucial decisions that prioritize human life and avoid subjective biases based on physical traits.

In these no-win scenarios, self-driving cars face dilemmas such as whom to prioritize in a crash situation: the driver and passengers, pedestrians, or other drivers on the road. Answering this question raises concerns about who gets to make a decision that could significantly affect lives both inside and outside the vehicle.

A study conducted using an online game called “Moral Machine” revealed that people’s preferences for decision-making varied with geographic location and culture. This emphasizes the importance of developing ethical algorithms that prioritize human life while considering the diverse perspectives and values of different societies.

“The challenge lies in developing algorithms that prioritize human life while avoiding subjective biases based on physical traits.”

Creating ethical decision-making frameworks for self-driving cars involves a careful balance between the objective preservation of human life and the avoidance of discriminatory or biased actions. It requires comprehensive analysis of the potential consequences and implications of different prioritization strategies in various scenarios.

By considering the principles of utilitarianism, where the primary goal is to maximize overall well-being, self-driving cars could be programmed to prioritize saving the most lives possible. This approach would not take into account physical characteristics or subjective biases, focusing solely on the preservation of human life.

However, while prioritizing the preservation of human life seems logical, it is essential to acknowledge the potential downsides. Implementing a utilitarian-based approach could inadvertently put certain groups, such as pedestrians or passengers, at a disadvantage depending on the circumstances of a specific scenario.
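The utilitarian rule discussed above can be sketched in a few lines. This is only an illustration: the `Maneuver` type and the casualty estimates are hypothetical, and a real system would have to derive such estimates from uncertain perception and prediction data.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Maneuver:
    name: str
    expected_casualties: float  # hypothetical estimate, not a real sensor output


def pick_utilitarian(options: Sequence[Maneuver]) -> Maneuver:
    """Return the maneuver with the fewest expected casualties.

    Only the casualty estimate is consulted; age, gender, and other
    personal traits are deliberately not inputs.
    """
    if not options:
        raise ValueError("no maneuvers to choose from")
    return min(options, key=lambda m: m.expected_casualties)


options = [
    Maneuver("brake_straight", 1.2),
    Maneuver("swerve_left", 0.4),
    Maneuver("swerve_right", 0.9),
]
print(pick_utilitarian(options).name)  # swerve_left
```

Note that the rule is silent on who bears the remaining risk. If swerving left consistently shifts harm toward pedestrians, or toward passengers, the count alone will not reveal it, which is the downside described above.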

Ethical Decision-Making Framework for Self-Driving Cars

| Decision-Making Approach | Advantages | Disadvantages |
|---|---|---|
| Utilitarianism (maximizing overall well-being) | Focuses on preserving human life; considers the greater good | Potential discrimination against certain groups; disregards individual rights |
| Deontology (adherence to moral principles and rules) | Considers individual rights and principles; provides a clear moral framework | Lack of flexibility in complex scenarios; may not prioritize the preservation of life |
| Virtue ethics (emphasizing character and virtues) | Considers individual virtues and behaviors; encourages moral development | Subjective interpretation of virtues; lack of guidance in complex scenarios |

Designing an ethical decision-making framework for self-driving cars requires a multidisciplinary approach that involves engineers, ethicists, lawmakers, and society as a whole. The goal is to strike a balance between preserving human life and promoting fairness, while considering the contextual nuances of each scenario.

Ultimately, the challenge lies in developing algorithms and decision-making frameworks that prioritize the safety of all individuals involved, while respecting diversity, minimizing discrimination, and maintaining public trust in the capabilities of self-driving cars.

Legal and Liability Issues: Who is Responsible?

The development and integration of self-driving cars bring forth legal and liability issues. In accidents involving self-driving cars, it becomes a challenge to determine who is responsible. While some argue that the car manufacturer or software developer should be held accountable, current laws often require a licensed driver to be in the car and ready to take over if needed. Governments may need to establish regulations regarding liability and accident causes, particularly in cases where technical glitches or shortcomings occur. Furthermore, the question arises of whether it is safe to trust human lives in the hands of AI, considering the potential risks of hacking and cyber attacks on self-driving cars.

Liability Issues in Self-Driving Cars

When it comes to self-driving cars, determining liability in the event of an accident can be complex. Traditionally, the driver of the vehicle has been held responsible for any damages or injuries caused. However, with autonomous vehicles, the lines of responsibility become blurred. Should the manufacturer be held liable for any malfunctions or software errors? Or should the responsibility still lie with a licensed driver who is ultimately responsible for the vehicle?

In some cases, existing laws require a licensed driver to be present in the self-driving car and ready to take control if necessary. This splits responsibility between the driver and the autonomous system. However, as self-driving technology continues to advance, a human driver may no longer be necessary, shifting the burden of responsibility solely onto the vehicle manufacturer or software developer.

Regulations and Accident Causes

Governments and regulatory bodies will play a crucial role in establishing rules and regulations regarding liability and accident causes in self-driving cars. Clear guidelines need to be set to determine the responsibility in case of technical glitches, malfunctions, or errors in the autonomous systems. Without proper regulations, legal battles and uncertainty around liability could hinder the widespread adoption of self-driving cars.

Additionally, accidents involving self-driving cars need to be thoroughly investigated to determine their cause and assign responsibility. This may involve analyzing the behavior of the autonomous system, reviewing data logs, and conducting forensic examinations to determine whether technical glitches or shortcomings caused the accident.
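As a rough illustration of the log review described above, the sketch below answers two questions an investigator might ask: which mode was the vehicle in at the moment of impact, and did any faults occur shortly beforehand? The `LogEvent` format and the event kinds are hypothetical; real vehicle data recorders use their own formats.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class LogEvent:
    t: float      # seconds since the start of the trip
    kind: str     # "mode_change" or "fault" (hypothetical event kinds)
    detail: str   # e.g. "autonomous", "manual", or a fault description


def mode_at(events: Sequence[LogEvent], t_impact: float) -> Optional[str]:
    """Return the last driving mode set at or before the impact time."""
    mode = None
    for event in sorted(events, key=lambda e: e.t):
        if event.t > t_impact:
            break
        if event.kind == "mode_change":
            mode = event.detail
    return mode


def faults_before(events: Sequence[LogEvent], t_impact: float,
                  window_s: float = 5.0) -> List[str]:
    """List faults logged within window_s seconds before the impact."""
    return [e.detail for e in sorted(events, key=lambda e: e.t)
            if e.kind == "fault" and t_impact - window_s <= e.t <= t_impact]


trip = [
    LogEvent(0.0, "mode_change", "autonomous"),
    LogEvent(118.2, "fault", "lidar dropout"),
    LogEvent(121.0, "mode_change", "manual"),
]
print(mode_at(trip, 120.0))        # autonomous
print(faults_before(trip, 120.0))  # ['lidar dropout']
```

A result like this would suggest the autonomous system was in control and had reported a sensor fault just before impact, but it is evidence for an investigation rather than an assignment of liability.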

The Role of Cybersecurity

As self-driving cars become more connected and reliant on AI systems, the risk of hacking and cyber attacks increases. Unauthorized access to autonomous vehicles could lead to severe consequences, including accidents deliberately caused by external sources. Ensuring the cybersecurity of self-driving cars is essential to prevent malicious interference and protect the lives of passengers and pedestrians.

The responsibility for cybersecurity falls on both the car manufacturers and the software developers. Robust security measures need to be implemented to protect autonomous vehicles from potential cyber threats. Continuous monitoring and updates to address vulnerabilities will be necessary to stay ahead of hackers and ensure the safety of self-driving cars on the road.

| Legal and Liability Issues | Key Points |
|---|---|
| Liability | Blurred lines of responsibility between manufacturers and drivers |
| Regulations | Government oversight to establish clear rules regarding liability and accident causes |
| Cybersecurity | Protection against hacking and cyber attacks for safe operation |

Cultural and Moral Variations in Ethics

Cultural and moral variations significantly influence the ethical considerations surrounding self-driving cars. Research has shown that decision-making preferences in accident scenarios differ between cultures. In survey research, for instance, respondents in Western countries tended to show a stronger preference for sparing the young over the elderly than respondents in many Eastern countries, while a mild inclination to spare women over men appeared across most regions. However, relying on these subjective biases in training autonomous vehicles is neither fair nor ideal.

One potential solution is to train algorithms to prioritize saving the most lives possible, regardless of physical characteristics. With an impartial approach, self-driving cars can navigate ethical dilemmas without succumbing to moral biases. Germany offers one example: ethics guidelines for automated driving issued there in 2017 give human life priority over animals or property and rule out distinctions based on personal features such as age or gender.
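A strict priority rule of the kind described above, with human life ahead of animals or property, can be expressed as a lexicographic comparison. The sketch below is illustrative only: the `Outcome` type and its fields are hypothetical, and ranking animals ahead of property is an assumption made for the example, since the rule above only places human life above both.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class Outcome:
    name: str
    humans_harmed: int
    animals_harmed: int
    property_damage_eur: float


def priority_key(outcome: Outcome) -> Tuple[int, int, float]:
    """Tuples compare element by element, so human harm always dominates.

    Animal harm and property damage only break ties (their relative order
    is an assumption of this sketch). No personal traits are inputs.
    """
    return (outcome.humans_harmed, outcome.animals_harmed,
            outcome.property_damage_eur)


def choose(outcomes: Sequence[Outcome]) -> Outcome:
    """Pick the outcome that is best under the lexicographic ordering."""
    return min(outcomes, key=priority_key)


candidates = [
    Outcome("brake_hard", humans_harmed=1, animals_harmed=0, property_damage_eur=0.0),
    Outcome("hit_deer", humans_harmed=0, animals_harmed=1, property_damage_eur=3000.0),
    Outcome("swerve_into_fence", humans_harmed=0, animals_harmed=0, property_damage_eur=8000.0),
]
print(choose(candidates).name)  # swerve_into_fence
```

Unlike a weighted sum, this ordering never trades any amount of property damage against a human injury, which is what a strict priority rule asks for.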

By developing algorithms that account for cultural and moral variations while maintaining objectivity, we can strive towards a fair and ethical future for self-driving cars.

Trust and Security Challenges for Self-Driving Cars

Trust and security are paramount concerns when it comes to self-driving cars. With the potential for hacking incidents and cyber attacks, questions arise about whether it is safe to entrust human lives to AI-controlled vehicles. The rise of hackers remotely controlling vehicles, including those without autonomous capabilities, underscores the urgent need for robust security measures in self-driving cars. These vehicles are increasingly connected to the internet for updates and navigation, making them vulnerable to cybercriminals who could seize control. The ethical question arises: Are the potential risks of cybercrime greater than the risks of human error on the road? Can self-driving cars be considered safe enough for widespread adoption?

“The potential for cybercriminals to exploit vulnerabilities in self-driving cars raises urgent concerns about the safety and security of this technology.”

The security challenges faced by self-driving cars are significant. Cybercriminals could potentially take control of these vehicles, jeopardizing the safety of passengers, pedestrians, and other road users. The consequences of such attacks could be catastrophic. It is crucial to address these security challenges to establish trust in the safety and reliability of self-driving cars.

- Enhancing cybersecurity measures: Self-driving car manufacturers must prioritize adopting robust cybersecurity protocols to safeguard against hacking and cyber attacks. This includes implementing strict authentication and encryption mechanisms, regularly updating software, and conducting thorough vulnerability assessments.

- Collaboration and information sharing: The development and deployment of self-driving cars necessitate collaboration among manufacturers, software developers, government agencies, and cybersecurity experts. Sharing information regarding emerging threats and vulnerabilities will help shape industry-wide security standards.

- Continuous monitoring and response: Self-driving cars require real-time monitoring systems to detect and respond to potential security breaches promptly. Anomaly detection algorithms, intrusion detection systems, and automated incident response mechanisms are vital in maintaining the integrity and safety of these vehicles.

- Regulatory frameworks: Governments must establish robust regulations and standards for cybersecurity in self-driving cars. These frameworks should address liability issues and hold manufacturers accountable for ensuring the security of their vehicles.
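The authentication measure in the first item above can be sketched for the case of a software update: the vehicle accepts an update only if its authentication tag checks out. This example uses an HMAC with a shared secret key from Python's standard library purely for brevity. Production update systems typically rely on asymmetric signatures, and the function names and key handling here are assumptions.

```python
import hashlib
import hmac


def sign_update(payload: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the update payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_update(payload: bytes, key: bytes, tag_hex: str) -> bool:
    """Accept the update only if the tag matches.

    compare_digest runs in constant time to avoid leaking information
    about the expected tag through timing differences.
    """
    expected = sign_update(payload, key)
    return hmac.compare_digest(expected, tag_hex)


key = b"demo-key-do-not-use-in-production"  # placeholder secret for the example
firmware = b"firmware image bytes"
tag = sign_update(firmware, key)

print(verify_update(firmware, key, tag))                # True
print(verify_update(firmware + b" tampered", key, tag)) # False
```

A tampered payload, or one signed with a different key, fails verification and would be rejected before installation.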

By addressing the security challenges and ensuring the trustworthiness of self-driving cars, society can unlock the potential benefits of this technology while mitigating the risks posed by cybercrime.

| Trust and Security Challenges for Self-Driving Cars | Solutions and Strategies |
|---|---|
| 1. Potential for hacking and cyber attacks | Enhancing cybersecurity measures |
| 2. Vulnerabilities in self-driving car systems | Collaboration and information sharing |
| 3. Security breaches that go undetected in real time | Continuous monitoring and response |
| 4. Lack of regulatory frameworks | Establishing regulatory frameworks |

Conclusion

The development and integration of self-driving cars raise complex ethical considerations that must be addressed before these vehicles can become mainstream. The programming of algorithms to prioritize human life and avoid subjective biases is crucial for the safe and ethical deployment of self-driving technology. Additionally, the establishment of legal and liability frameworks is necessary to determine responsibility in self-driving car accidents.

Trust in the safety and security of self-driving cars is paramount for public acceptance and widespread adoption. Ethical decision-making in the development and operation of self-driving cars is essential to ensure the protection of human lives and the responsible use of AI in this evolving technology.

To fully embrace the potential of self-driving cars, ethical considerations such as the handling of no-win scenarios, cultural variations in ethics, and the challenges of trust and security must be carefully addressed. By doing so, we can create a future where self-driving cars not only provide convenience and efficiency but also prioritize the well-being and safety of all individuals on the road.

FAQ

What are the ethical considerations in the development of self-driving cars?

The development of self-driving cars brings forth ethical concerns regarding the programming and training of their algorithms, particularly in high-stakes situations. Questions arise about how autonomous vehicles should respond in no-win scenarios and who should make decisions that could impact lives inside and outside the vehicle.

Are self-driving cars safer than human-driven vehicles?

Self-driving cars have the potential to be safer due to their precision and consistency compared to human drivers. The algorithms of AI-driven vehicles are trained to prioritize certain actions and navigate complex scenarios. However, ethical considerations arise from programming these algorithms to respond in high-stakes situations.

How should self-driving cars respond in no-win scenarios?

No-win scenarios pose a challenge in determining how self-driving cars should prioritize saving the driver and passengers, pedestrians, or other drivers in a crash situation. This raises ethical concerns about decision-making and who gets to make the choices that could impact lives both inside and outside the vehicle.

Who is responsible in accidents involving self-driving cars?

Determining responsibility in accidents involving self-driving cars can be challenging. While some argue that car manufacturers or software developers should be held accountable, current laws often require a licensed driver to be in the car and ready to take over if needed. Governments may need to establish regulations regarding liability and accident causes.

How do cultural and moral variations impact the ethical considerations of self-driving cars?

Cultural and moral biases influence people’s preferences for decision-making in accidents, and those preferences differ between cultures. For example, how strongly people favor sparing the young over the elderly, or women over men, varies from region to region. However, training algorithms on subjective biases is not considered fair or ideal. Germany’s ethics guidelines for automated driving give human life priority over animals or property.

What are the trust and security challenges for self-driving cars?

Trust and security are major challenges for self-driving cars. The potential for hacking incidents and cyber attacks raises concerns about the safety of relying on AI-controlled vehicles. With increased connectivity to the internet, the risk of cybercriminals taking control of these vehicles becomes greater. The ethical question arises whether the potential risks of cybercrime outweigh the risks of human error on the road.

What are the key considerations in the development of self-driving cars?

The development of self-driving cars requires addressing complex ethical considerations. These include programming algorithms that prioritize human life and avoid subjective biases, establishing legal and liability frameworks, and ensuring trust in the safety and security of self-driving cars for widespread adoption.